Amazon Kinesis Data Firehose is a fully managed service that scales automatically with the volume of data you send to it. If large quantities of data suddenly arrive, it scales up to absorb them, and it scales back down again when the volume drops. Firehose can also encrypt the data and deliver it to its final destination.

The following are some key facts to understand about Amazon Kinesis Firehose:

Kinesis Data Firehose can also transform the data that passes through it before delivering it to its final destination (typically by invoking an AWS Lambda function that you supply). Take, for example, a log entry received from a web server. As Kinesis Data Firehose ingests the log, it receives the raw data string shown here:

```text
199.72.81.55 - - [01/Jul/1995:00:00:01 -0400] "GET /history/apollo/ HTTP/1.0" 200 6245
```

It can then transform that raw log entry into a JSON document, such as the one shown here:

```json
{
  "verb": "GET",
  "ident": "-",
  "bytes": 6245,
  "@timestamp": "1995-07-01T00:00:01",
  "request": "GET /history/apollo/ HTTP/1.0",
  "host": "199.72.81.55",
  "authuser": "-",
  "@timestamp_utc": "1995-07-01T04:00:01+00:00",
  "timezone": "-0400",
  "response": 200
}
```
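The conversion above can be sketched as a Firehose data-transformation Lambda function. This is a minimal sketch, not the exact transformation that produced the output above: it assumes the input is in the Apache/NCSA common log format, and the regular expression, the hypothetical `parse_log_line` helper, and the choice to record a `-` byte count as `0` are illustrative assumptions. The handler follows the Firehose transformation contract of returning one entry per input `recordId`, each with a `result` (`Ok` or `ProcessingFailed`) and base64-encoded `data`.

```python
import base64
import json
import re
from datetime import datetime, timezone

# Apache/NCSA common log format: host ident authuser [date] "request" status bytes
CLF_PATTERN = re.compile(
    r'^(?P<host>\S+) (?P<ident>\S+) (?P<authuser>\S+) '
    r'\[(?P<datetime>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<response>\d{3}) (?P<bytes>\S+)$'
)


def parse_log_line(line):
    """Convert one common-log-format line into a dict shaped like the JSON example."""
    match = CLF_PATTERN.match(line.strip())
    if match is None:
        raise ValueError(f"not a common log format line: {line!r}")
    fields = match.groupdict()
    # "01/Jul/1995:00:00:01 -0400" -> timezone-aware datetime
    local_time = datetime.strptime(fields["datetime"], "%d/%b/%Y:%H:%M:%S %z")
    return {
        "verb": fields["request"].split(" ")[0],
        "ident": fields["ident"],
        # Assumption: a "-" byte count (no body sent) is recorded as 0
        "bytes": 0 if fields["bytes"] == "-" else int(fields["bytes"]),
        "@timestamp": local_time.replace(tzinfo=None).isoformat(),
        "request": fields["request"],
        "host": fields["host"],
        "authuser": fields["authuser"],
        "@timestamp_utc": local_time.astimezone(timezone.utc).isoformat(),
        "timezone": fields["datetime"].split(" ")[1],
        "response": int(fields["response"]),
    }


def lambda_handler(event, context):
    """Firehose transformation handler: returns one output entry per input recordId."""
    output = []
    for record in event["records"]:
        try:
            raw = base64.b64decode(record["data"]).decode("utf-8")
            # Trailing newline keeps delivered objects as one JSON document per line
            transformed = json.dumps(parse_log_line(raw)) + "\n"
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": base64.b64encode(transformed.encode("utf-8")).decode("ascii"),
            })
        except ValueError:  # also covers UnicodeDecodeError
            # Return the original bytes and flag the record as a processing failure
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```

Flagging unparseable lines as `ProcessingFailed`, rather than dropping them, means Firehose treats them as failures instead of silently delivering half-parsed records.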

This is especially useful when storing the logs in a service such as Amazon OpenSearch Service. Because each log entry has been broken into named fields, records in this format can easily be iterated over and counted: you can compare the number of GET requests with the number of POST requests, tally response codes, and analyze other metrics more quickly and easily.
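As a small illustration of that kind of tally, the following sketch counts HTTP verbs and response codes across newline-delimited JSON records in the format shown above. The `tally` function and the sample records are hypothetical; in OpenSearch itself you would more likely run a terms aggregation over the indexed fields, so treat this as a local illustration of why structured records are easy to count.

```python
import json
from collections import Counter


def tally(ndjson_text):
    """Count HTTP verbs and response codes across newline-delimited JSON records."""
    verbs = Counter()
    responses = Counter()
    for line in ndjson_text.splitlines():
        if not line.strip():
            continue  # skip blank lines between records
        record = json.loads(line)
        verbs[record["verb"]] += 1
        responses[record["response"]] += 1
    return verbs, responses


# Hypothetical sample records, trimmed to the fields used here
SAMPLE = "\n".join([
    '{"verb": "GET", "response": 200, "request": "GET /index.html HTTP/1.0"}',
    '{"verb": "GET", "response": 304, "request": "GET /logo.gif HTTP/1.0"}',
    '{"verb": "POST", "response": 200, "request": "POST /search HTTP/1.0"}',
])

if __name__ == "__main__":
    verbs, responses = tally(SAMPLE)
    print(verbs["GET"], verbs["POST"])  # 2 1
    print(responses[200])               # 2
```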

Note that Amazon Kinesis also offers another service, Kinesis Data Streams. Kinesis Data Streams is used more for custom analytical processing and typically requires you to configure its shards to provide capacity. You do not need to know the details of Kinesis Data Streams for the AWS Security Specialty exam. If you want to learn more about the service, you can visit the following URL: https://packt.link/jz5FP.

With an overview of Kinesis Data Firehose under your belt, you are now ready to look at how you can use the Firehose service to move logs out of CloudWatch Logs to other storage options.

If you have decided that storing your logs in CloudWatch Logs will be cost-prohibitive for your organization, you need a way to get the logs out of CloudWatch Logs and into an Amazon S3 bucket. Kinesis Data Firehose can help you accomplish this task, as you will see in the next exercise.
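Getting log events out of CloudWatch Logs and into Firehose relies on a subscription filter on the log group whose destination is the Firehose delivery stream. The exercise in this section works through the console; the following is a minimal sketch of roughly the same wiring using the AWS SDK for Python (boto3). The stream name, S3 prefix, filter name, buffering values, encryption key type, and the two IAM roles (one that Firehose assumes to write to the bucket, one that CloudWatch Logs assumes to put records into the stream) are all assumptions you would replace with your own. The request builders are kept separate from the boto3 calls so that they can be checked without AWS credentials.

```python
import time

STREAM_NAME = "cloudwatch-logs-to-s3"  # hypothetical stream name


def delivery_stream_request(stream_name, bucket_arn, firehose_role_arn):
    """Arguments for firehose.create_delivery_stream with an S3 destination."""
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",  # CloudWatch Logs pushes records in
        # Server-side encryption of data at rest in the stream (AWS owned key)
        "DeliveryStreamEncryptionConfigurationInput": {"KeyType": "AWS_OWNED_CMK"},
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": firehose_role_arn,  # role Firehose assumes to write to S3
            "BucketARN": bucket_arn,
            "Prefix": "cloudwatch-logs/",  # hypothetical key prefix
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 300},
        },
    }


def subscription_filter_request(log_group, stream_arn, logs_role_arn):
    """Arguments for logs.put_subscription_filter pointing a log group at the stream."""
    return {
        "logGroupName": log_group,
        "filterName": "to-firehose",  # hypothetical filter name
        "filterPattern": "",  # empty pattern forwards every log event
        "destinationArn": stream_arn,
        "roleArn": logs_role_arn,  # role CloudWatch Logs assumes to call Firehose
    }


def wait_until_active(firehose, stream_name, delay=10, attempts=30):
    """Poll describe_delivery_stream until the stream is ACTIVE; return its ARN."""
    for _ in range(attempts):
        description = firehose.describe_delivery_stream(
            DeliveryStreamName=stream_name)["DeliveryStreamDescription"]
        if description["DeliveryStreamStatus"] == "ACTIVE":
            return description["DeliveryStreamARN"]
        time.sleep(delay)
    raise TimeoutError(f"delivery stream {stream_name} did not become ACTIVE")


def deploy(log_group, bucket_arn, firehose_role_arn, logs_role_arn):
    """Create the stream, wait for it, then subscribe the log group to it."""
    import boto3  # imported here so the builders above work without boto3 installed

    firehose = boto3.client("firehose")
    logs = boto3.client("logs")
    firehose.create_delivery_stream(
        **delivery_stream_request(STREAM_NAME, bucket_arn, firehose_role_arn))
    stream_arn = wait_until_active(firehose, STREAM_NAME)
    logs.put_subscription_filter(
        **subscription_filter_request(log_group, stream_arn, logs_role_arn))
```

CloudWatch Logs already gzip-compresses the data it sends to Firehose, so the sketch leaves Firehose's own compression setting at its default (uncompressed).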

As a prerequisite to this exercise, you should have already created one or more CloudWatch Logs log groups in your account and have at least one Amazon S3 bucket available as the destination to which the new Kinesis Data Firehose delivery stream will deliver the logs.

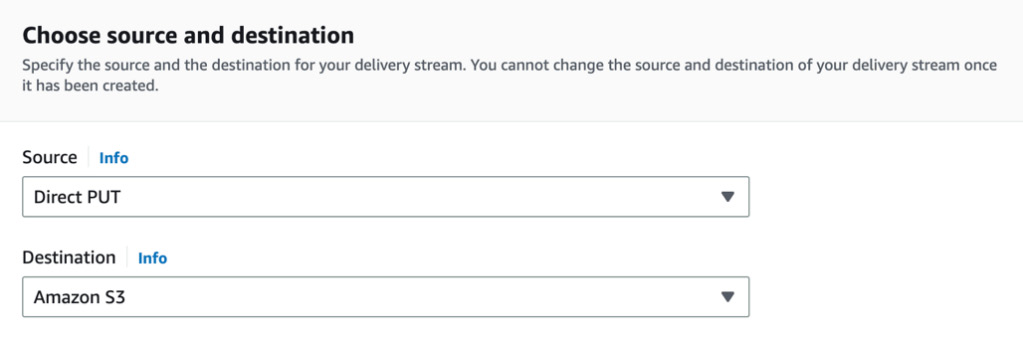

Figure 9.8: Source and Destination selection screen for Firehose

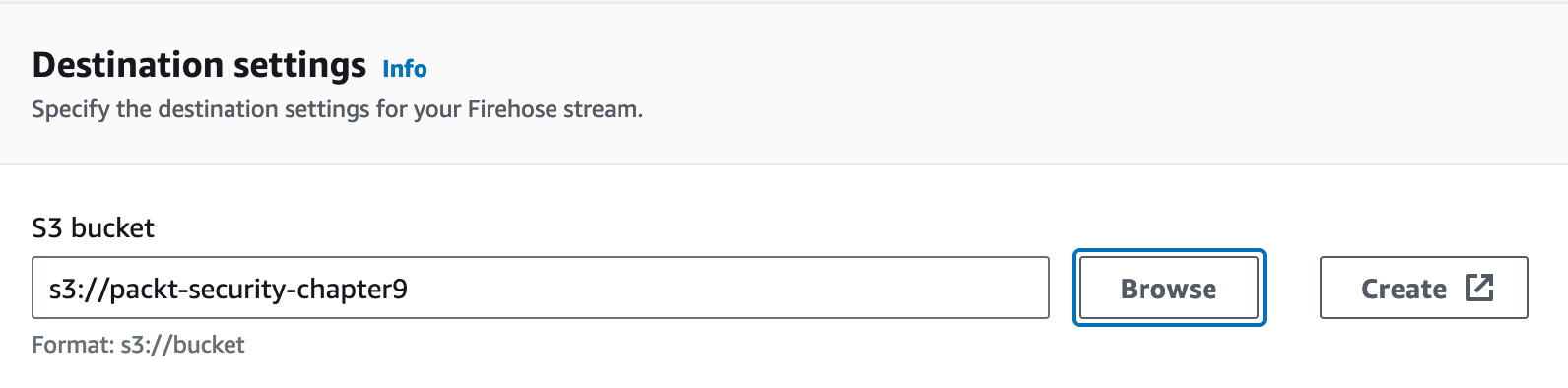

Figure 9.9: Destination settings for Firehose
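Once logs start arriving in the bucket, you may want to read them back. The following is a minimal sketch, assuming the delivery stream passes CloudWatch Logs records through without transformation or decompression. In that case, each record is a gzip-compressed JSON document with `messageType`, `logGroup`, and `logEvents` fields, and one S3 object can hold several such records back to back. The `decode_cloudwatch_object` helper is hypothetical; you would pass it the raw bytes of an object you have downloaded yourself.

```python
import gzip
import json


def decode_cloudwatch_object(body):
    """Yield (log group, message) pairs from an S3 object of CloudWatch Logs records.

    Assumes each record is a gzip-compressed JSON document and that records are
    concatenated with no separator between them.
    """
    # gzip.decompress handles multiple concatenated gzip members
    text = gzip.decompress(body).decode("utf-8")
    decoder = json.JSONDecoder()
    pos = 0
    while True:
        # Skip any whitespace between JSON documents
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            break
        document, pos = decoder.raw_decode(text, pos)
        # CONTROL_MESSAGE records only check that the destination is reachable
        if document.get("messageType") != "DATA_MESSAGE":
            continue
        for event in document["logEvents"]:
            yield document["logGroup"], event["message"]
```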