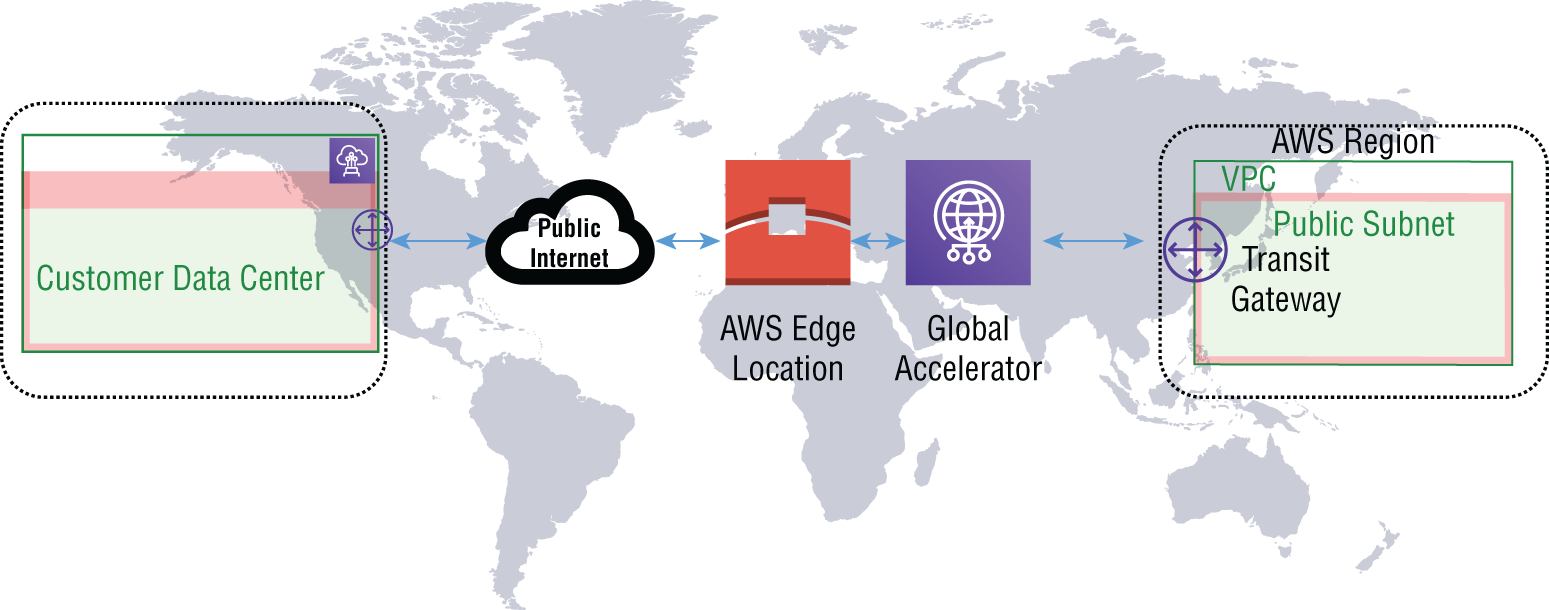

There is an option to use the AWS VPN Global Accelerator to decrease the network latency and congestion experienced when routing your VPN traffic over the public Internet. The AWS accelerated site-to-site VPN is routed to the nearest Global Accelerator edge location and then traverses the internal AWS global network instead of the Internet, as shown in Figure 7.2. For more information on the Global Accelerator, refer to Chapter 1, “Edge Networking,” or review the AWS web console at https://aws.amazon.com/global-accelerator.

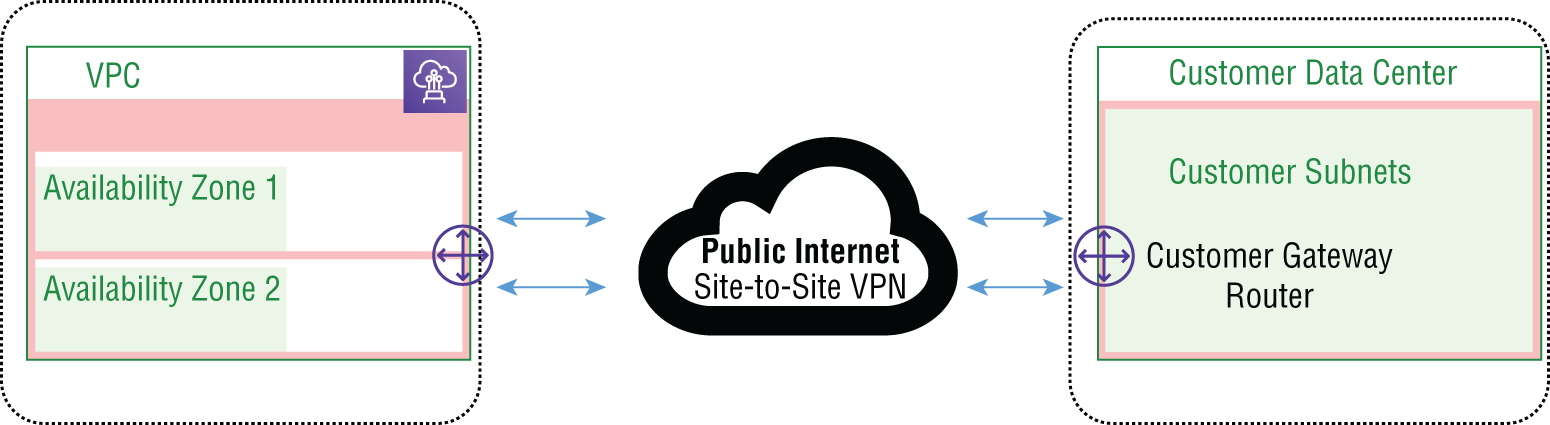

FIGURE 7.1 Dual tunnel site-to-site VPN

FIGURE 7.2 Accelerated site-to-site VPN

In this section, we will cover how the accelerated AWS site-to-site VPN service provides faster response times than traversing the public Internet: VPN traffic is routed from your customer gateway VPN termination appliance to the nearest AWS edge location.

By default, all VPN traffic traverses the Internet and bypasses Global Accelerator. You must explicitly enable acceleration when you create the VPN connection; to add it to a connection that is already set up, you must create a new connection. With acceleration enabled, the VPN tunnel endpoint IP addresses point to Global Accelerator endpoints. For the steps to create an accelerated VPN connection, see the AWS documentation at https://docs.aws.amazon.com/vpn/latest/s2svpn/create-tgw-vpn-attachment.html.

There are some limitations and restrictions that you must know about when architecting or operating an accelerated site-to-site VPN. Acceleration requires a transit gateway, because virtual private gateways do not support accelerated VPN connections. Accelerated VPN connections also cannot run over AWS Direct Connect (DX) public virtual interfaces.
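The constraints above can be captured in a small helper that refuses to build an accelerated request against a virtual private gateway. This is a minimal sketch, assuming you create connections through the Amazon EC2 `CreateVpnConnection` API: the dictionary keys (`CustomerGatewayId`, `Type`, `TransitGatewayId`, `Options.EnableAcceleration`) follow that API, while the function name `build_accelerated_vpn_request` and its error checks are hypothetical illustrations.

```python
from typing import Optional


def build_accelerated_vpn_request(
    customer_gateway_id: str,
    transit_gateway_id: Optional[str] = None,
    vpn_gateway_id: Optional[str] = None,
) -> dict:
    """Hypothetical helper: parameters for an accelerated site-to-site VPN."""
    # Virtual private gateways do not support accelerated VPN connections.
    if vpn_gateway_id is not None:
        raise ValueError("accelerated VPN is not supported on a virtual private gateway")
    # Acceleration is only available on a transit gateway attachment.
    if transit_gateway_id is None:
        raise ValueError("accelerated VPN requires a transit gateway")
    return {
        "CustomerGatewayId": customer_gateway_id,
        "Type": "ipsec.1",  # the VPN connection type EC2 expects
        "TransitGatewayId": transit_gateway_id,
        # Acceleration is fixed at creation time; it cannot be toggled later.
        "Options": {"EnableAcceleration": True},
    }
```

With placeholder IDs, `params = build_accelerated_vpn_request("cgw-0123example", transit_gateway_id="tgw-0123example")` could then be passed to a boto3 EC2 client as `create_vpn_connection(**params)`. Validating up front keeps the transit gateway requirement visible in code review rather than surfacing as an API error.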

To accelerate an existing site-to-site VPN connection, you must create a new site-to-site VPN connection with acceleration enabled, because acceleration cannot be enabled or disabled on an existing connection. Next, configure your customer gateway VPN appliance to use the new connection. After the new connection is established, delete the old one. NAT traversal (NAT-T) must be enabled in the configuration on both ends of the VPN connection, and to keep the accelerated tunnels up, Internet Key Exchange (IKE) rekeys must be initiated from the customer end of the VPN.
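The migration is a make-before-break sequence: create the accelerated connection, point the customer gateway at it, and delete the old connection only once the new one is carrying traffic. The check below is a minimal sketch of that last gate, assuming `new_connection` has the shape of one entry in the `VpnConnections` list returned by the EC2 `DescribeVpnConnections` API (`State`, `Options.EnableAcceleration`, and `VgwTelemetry[].Status`). The function name is hypothetical, and requiring every tunnel to be `UP` is a conservative assumption rather than an AWS rule.

```python
def safe_to_delete_old(new_connection: dict) -> bool:
    """Hypothetical gate: True once the accelerated replacement is fully up."""
    options = new_connection.get("Options", {})
    # Only an accelerated connection is a valid replacement.
    if not options.get("EnableAcceleration", False):
        return False
    # The connection itself must have finished provisioning.
    if new_connection.get("State") != "available":
        return False
    # Conservative assumption: wait until every tunnel reports UP.
    tunnels = new_connection.get("VgwTelemetry", [])
    return len(tunnels) > 0 and all(t.get("Status") == "UP" for t in tunnels)
```

Checking both tunnels, not just one, avoids deleting the old connection while the new one still has no redundancy.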

Chapter 6 covered the interconnection hardware required at AWS Direct Connect locations; refer to it for a detailed lesson on AWS Direct Connect.

The AWS network interface to your equipment in the DX facility uses single-mode fiber with pluggable optical transceivers.

For 1 Gigabit connections, a 1000BASE-LX (1310 nm) single-mode SFP transceiver is used; for 10 Gigabit, a 10GBASE-LR (1310 nm) transceiver; and for 100 Gigabit Ethernet, a 100GBASE-LR4 transceiver.
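When ordering optics for a cross-connect, the speed-to-transceiver mapping above fits in a simple lookup table. This is a minimal sketch; `DX_OPTICS` and `optic_for` are hypothetical names, and the table holds only the three port speeds listed in this section.

```python
# Port speed in Gbps -> transceiver type for the DX cross-connect.
# All three run over single-mode fiber.
DX_OPTICS = {
    1: "1000BASE-LX (1310 nm)",
    10: "10GBASE-LR (1310 nm)",
    100: "100GBASE-LR4",
}


def optic_for(port_speed_gbps: int) -> str:
    """Return the transceiver type for a port speed listed in DX_OPTICS."""
    try:
        return DX_OPTICS[port_speed_gbps]
    except KeyError:
        raise ValueError(f"{port_speed_gbps} Gbps is not in this table") from None
```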

Several physical-layer requirements apply at the DX facility. When the port speed is higher than 1 Gbps, autonegotiation must be disabled on your router interface connected to the AWS router. VLAN tagging using 802.1Q must be enabled. Frame sizes from 1,522 bytes up to 9,023 bytes (jumbo frames) are supported.
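The two frame-size limits line up with familiar MTU values once Layer 2 overhead is added. The sketch below assumes a 14-byte Ethernet header, a 4-byte 802.1Q tag, and a 4-byte frame check sequence (22 bytes in total), which maps a 1,500-byte MTU to 1,522 bytes and a 9,001-byte MTU to 9,023 bytes. The `check_interface` function is a hypothetical pre-flight check that treats the stated range as the accepted window and encodes the autonegotiation and 802.1Q rules from the paragraph above.

```python
ETHERNET_HEADER = 14  # destination MAC + source MAC + EtherType
DOT1Q_TAG = 4         # 802.1Q VLAN tag, required on the DX link
FCS = 4               # frame check sequence


def frame_size(mtu: int) -> int:
    """Largest 802.1Q-tagged Ethernet frame for a given IP MTU."""
    return mtu + ETHERNET_HEADER + DOT1Q_TAG + FCS


def check_interface(speed_gbps: int, autoneg: bool, dot1q: bool, mtu: int) -> list:
    """Hypothetical check: return a list of problems (empty if none found)."""
    problems = []
    # Autonegotiation must be off above 1 Gbps.
    if speed_gbps > 1 and autoneg:
        problems.append("disable autonegotiation above 1 Gbps")
    # 802.1Q VLAN tagging is mandatory.
    if not dot1q:
        problems.append("enable 802.1Q VLAN tagging")
    size = frame_size(mtu)
    if not 1522 <= size <= 9023:
        problems.append(f"frame size {size} outside 1,522 to 9,023 bytes")
    return problems
```

For example, a 10 Gbps port with autonegotiation off, 802.1Q enabled, and a 9,001-byte MTU passes with no problems reported.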

Bidirectional Forwarding Detection (BFD) is optional on your side but enabled on the AWS end of the connection. It remains inactive until AWS receives BFD packets from your router, at which point it becomes active automatically. The routing protocol used for peering with AWS is BGP, with MD5 used for session authentication. Both IPv4 and IPv6 are supported.
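On the customer router, the BGP peering described above comes down to a neighbor statement, an MD5 password, and optionally BFD. The sketch below renders a Cisco IOS-style stanza as an illustration; the function name, ASNs, peer address, and key are hypothetical placeholders, and interface-level BFD timers (which IOS also needs before `fall-over bfd` takes effect) are deliberately left out. Other router platforms use different syntax.

```python
def render_bgp_neighbor(local_asn: int, peer_ip: str, peer_asn: int,
                        md5_key: str, use_bfd: bool = True) -> str:
    """Hypothetical renderer for a Cisco IOS-style BGP neighbor stanza."""
    lines = [
        f"router bgp {local_asn}",
        f" neighbor {peer_ip} remote-as {peer_asn}",
        # MD5 authentication of the BGP TCP session.
        f" neighbor {peer_ip} password {md5_key}",
    ]
    if use_bfd:
        # Sending BFD packets from this side activates BFD on the AWS end.
        lines.append(f" neighbor {peer_ip} fall-over bfd")
    return "\n".join(lines)
```

Called as `render_bgp_neighbor(65000, "169.254.255.1", 64512, "example-key")`, it produces a four-line stanza ending in the `fall-over bfd` line; passing `use_bfd=False` leaves BFD inactive on both ends.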